Contents tagged with General

-

Applying algorithms to a real-life problem

Today I encountered a real-life problem. My wife got some fresh pecans. After we cracked them and took out the large pieces, some smaller pieces of pecan and shell fragments were left in the bowl. My wife asked the kids to separate the pecan from the shell. There were three proposals:

1) Pick the pecan out. The trouble is that we have more pecan pieces than shell pieces. The time for this approach is proportional to the number of pecan pieces.

2) Pick the shell out. It is not clear whether the time would be small, because we still need to scan (by eye) among the pecan pieces to find the shell. In addition, it is hard to be sure that no shell remains at the end.

3) Pour them into water and hope that one is heavier than water and the other is lighter. This idea was never tested.

Then I proposed a 4th approach: grab a few pieces in my palm and apply 1) or 2), whichever is faster for that handful. This worked out really well.

The key is that once I have scanned the pecan and shell pieces in my palm, I never scan the same pieces again. Fast algorithms, whether for finding a word in a string, sorting a list, or finding the quickest route from point A to point B, all work by finding a smart way to minimize repeated operations on the same data.
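The handful approach can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the bowl is modeled as a list of the strings "pecan" and "shell", the handful size of 5 is arbitrary, and `separate` is a name made up for this sketch.

```python
def separate(bowl, handful_size=5):
    """Split a bowl of 'pecan'/'shell' pieces, one handful at a time.

    Returns (pecans, shells, picks), where picks counts the pieces that
    had to be picked out one by one.
    """
    pecans, shells, picks = [], [], 0
    for start in range(0, len(bowl), handful_size):
        # Each piece lands in exactly one handful, so it is scanned once.
        handful = bowl[start:start + handful_size]
        hand_pecans = [p for p in handful if p == "pecan"]
        hand_shells = [p for p in handful if p == "shell"]
        # Pick out whichever kind is rarer in this handful; the rest of
        # the handful is dumped in a single motion.
        picks += min(len(hand_pecans), len(hand_shells))
        pecans.extend(hand_pecans)
        shells.extend(hand_shells)
    return pecans, shells, picks


bowl = ["pecan"] * 7 + ["shell"] * 3
pecans, shells, picks = separate(bowl)
print(len(pecans), len(shells), picks)
```

The count of individual picks per handful is the smaller of the two piece counts, which is what "whichever is faster" amounts to in this simplified model.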

-

Theory and Practice of Database and Data Analysis (4) – Capturing additional information on relations

So far in this series, I have been talking about traversing related data by navigating down the referential constraints. The physical database makes no distinction about the nature of a foreign key, whether it is a parent-child relationship or another type of reference. In logical ER modeling, we often capture more information. So in this post, I will explore the idea of capturing additional information so that we can build more powerful tools.

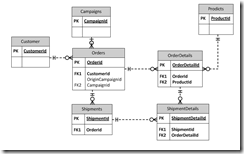

Let me further refrain myself by using the fictitious e-commerce company example introduced in the part 1 of this series: We have an Orders table to capture the order header and OrderDetails table to capture the line items. An order must be placed by a customer, and may or may not originates from marketing campaign. An order may be fulfilled by one or more shipments. Each shipment would ship order line items in part or in full. So we have an ER diagram likes the following:

In the ER diagram, there is a clear parent-child relationship between Orders and OrderDetails and between Shipments and ShipmentDetails. If we send the data in an XML file, each OrderDetail element would be a child of an Order element and each ShipmentDetail element would be a child of a Shipment element. However, the ShipmentDetails table appears to have two parents: Shipments and OrderDetails. We pick the parent by the importance of the relationship, so we pick Shipments. We then need to establish an additional mechanism to store the cross references between ShipmentDetails and OrderDetails in our XML file.

Let us examine the other relations. Customer is a mandatory attribute of the Orders table and Product is a mandatory attribute of OrderDetails. Campaign is an optional attribute of Orders. We can further classify Products as a system table and Customers as a data table.

So we have classified the relations in our simple example into several categories: parent-child, cross-reference, mandatory attribute to system table, mandatory attribute to data table and optional attribute.
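As a rough sketch, this classification could be recorded as metadata alongside the physical foreign keys. The enum, the dictionary layout, and the `children_of` helper are my own illustration, and the table name Campaigns is an assumption (the text above only mentions a Campaign attribute).

```python
from enum import Enum


class RelationKind(Enum):
    PARENT_CHILD = "parent-child"
    CROSS_REFERENCE = "cross-reference"
    MANDATORY_SYSTEM = "mandatory attribute to system table"
    MANDATORY_DATA = "mandatory attribute to data table"
    OPTIONAL = "optional attribute"


# (referencing table, referenced table) -> kind of relation.
# "Campaigns" is an assumed table name for the optional Campaign reference.
RELATIONS = {
    ("OrderDetails", "Orders"): RelationKind.PARENT_CHILD,
    ("ShipmentDetails", "Shipments"): RelationKind.PARENT_CHILD,
    ("ShipmentDetails", "OrderDetails"): RelationKind.CROSS_REFERENCE,
    ("Orders", "Customers"): RelationKind.MANDATORY_DATA,
    ("OrderDetails", "Products"): RelationKind.MANDATORY_SYSTEM,
    ("Orders", "Campaigns"): RelationKind.OPTIONAL,
}


def children_of(table):
    """Tables that are nested under `table` as parent-child relations."""
    return sorted(
        child
        for (child, parent), kind in RELATIONS.items()
        if parent == table and kind is RelationKind.PARENT_CHILD
    )


print(children_of("Orders"))
print(children_of("OrderDetails"))
```

With this in place, a generic tool can treat ShipmentDetails as a child of Shipments only, and handle its link to OrderDetails separately as a cross reference.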

Now let us use an example to see how such a classification can help us. Suppose we are building a tool to export all data related to a transaction from our production database into an XML file and then import it into our development database. The ID of an element could change from one system to another. That is not a problem for parent-child relations, as we get a new key when we insert the parent and we can propagate it down to the children. However, we need to take extra care to ensure that the cross references are maintained.

We do not have to worry about system tables, but we do have to worry about data tables. Our development database may or may not have the same customer. So from our classification, we can generically determine that we need to export a copy of each mandatory reference to a data table, along with its children, so that we can check whether it exists in the destination database.
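Here is a minimal sketch of the key handling during import, using plain dictionaries instead of a real database. Everything in it is an assumption for illustration: the column names (`id`, `order_id`, `shipment_id`, `order_detail_id`), the `import_order` function, and the `next_id` iterator that stands in for the destination's identity columns.

```python
import itertools


def import_order(export, next_id):
    """Copy exported rows into a destination, assigning new keys.

    Returns (dest, id_map), where id_map maps (table, old_id) -> new_id.
    """
    id_map = {}
    dest = {"Orders": [], "OrderDetails": [],
            "Shipments": [], "ShipmentDetails": []}

    def insert(table, row, **new_foreign_keys):
        # The new "id" replaces the source-system id on the copied row.
        new_row = {**row, **new_foreign_keys, "id": next(next_id)}
        id_map[(table, row["id"])] = new_row["id"]
        dest[table].append(new_row)
        return new_row["id"]

    # Parent-child: the parent's new key is propagated to its children.
    for order in export["Orders"]:
        new_order_id = insert("Orders", order)
        for detail in export["OrderDetails"]:
            if detail["order_id"] == order["id"]:
                insert("OrderDetails", detail, order_id=new_order_id)

    for shipment in export["Shipments"]:
        new_shipment_id = insert("Shipments", shipment)
        for sd in export["ShipmentDetails"]:
            if sd["shipment_id"] == shipment["id"]:
                # Cross reference: translate the old order-line key through
                # id_map, which is why order lines are imported first.
                old_ref = ("OrderDetails", sd["order_detail_id"])
                insert("ShipmentDetails", sd,
                       shipment_id=new_shipment_id,
                       order_detail_id=id_map[old_ref])
    return dest, id_map


export = {
    "Orders": [{"id": 100}],
    "OrderDetails": [{"id": 200, "order_id": 100},
                     {"id": 201, "order_id": 100}],
    "Shipments": [{"id": 300}],
    "ShipmentDetails": [{"id": 400, "shipment_id": 300,
                         "order_detail_id": 201}],
}
dest, id_map = import_order(export, itertools.count(1))
print(dest["ShipmentDetails"])
```

The point of the sketch is the ordering constraint: a cross reference can only be rewritten once the row it points to has been inserted and its new key is known.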

Besides categorization, we would also like to capture one-to-one relationships. Some of them can be inferred: if a referential constraint references the primary key in both tables, it is a one-to-one relationship. We would also like to capture hidden relationships; they are real references but cannot be enforced by a foreign key constraint in SQL Server. For example, some tables contain an ID column that can store multiple types of IDs; a separate column is used to indicate the type of ID stored in the ID column.

With human input of extra information beyond what we can extract from physical databases, we should be able to build better tools. I will explore some tools in upcoming posts of this series.
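The one-to-one inference rule can be sketched as a small check over constraint metadata. The function name, its parameters, and the OrderNotes table are hypothetical; a real tool would read the column lists from the database catalog.

```python
def is_one_to_one(fk_columns, referencing_pk, referenced_columns, referenced_pk):
    """True when a foreign key links a primary key to a primary key."""
    return (set(fk_columns) == set(referencing_pk)
            and set(referenced_columns) == set(referenced_pk))


# Hypothetical OrderNotes table: order_id is both its primary key and a
# foreign key to Orders.order_id, so an order has at most one notes row.
print(is_one_to_one(["order_id"], ["order_id"], ["order_id"], ["order_id"]))

# OrderDetails.order_id references Orders, but the primary key of
# OrderDetails is a different column, so many lines can share one order.
print(is_one_to_one(["order_id"], ["order_detail_id"], ["order_id"], ["order_id"]))
```

Strictly speaking, this detects a one-to-zero-or-one relationship, since the referencing row may not exist.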

-

Build a very green Windows Server

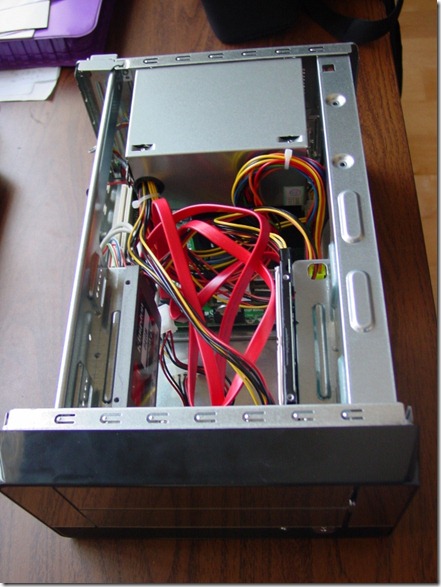

My old server consumes about 80 W. Given my top-tier electricity rate of $0.24/kWh, it costs me about $14 in electricity each month, and it generates considerable noise and heat. Recently, I set out to build a greener server. I bought an Intel Atom Dual-Core D525MW Fan-less Motherboard for $80 and an Apex MI-100 mITX case for $40. I also bought a Kingston 64GB SSD for $75 after rebate. I used 2 x 2GB DDR3 SODIMM RAM and a Toshiba 250GB notebook drive salvaged from my recent laptop upgrade. So my total out-of-pocket expense is just under $200 before tax. If I include the cost of the reused parts, the hardware cost is about $300. I cloned my Windows 2003 Server OS from my old server onto my new server. Although Intel does not support server operating systems on its desktop boards, I was able to install all the necessary drivers from the Intel CD, even though the setup program reported installation failure on 3 out of 4 drivers. The new server is barely audible; it is quieter than an outlet timer. Like any machine with an SSD, it boots up in seconds.

So how much power does it consume? My Kill-A-Watt meter tells me that it consumes just under 30 watts at idle. To put that in perspective, my previous Dish Network receiver and my current Time Warner HD receiver each consume about 20 watts at idle. It is quite adequate as my server.
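For a rough sense of the savings, the monthly cost can be worked out as below. This is a back-of-the-envelope sketch that assumes a 30-day month, a constant draw around the clock, and the full top-tier rate; 30 W is an upper bound since the meter read just under that at idle.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month, always on


def monthly_cost(watts, rate_per_kwh=0.24):
    """Electricity cost in dollars for a constant load over one month."""
    return watts / 1000 * HOURS_PER_MONTH * rate_per_kwh


old_cost = monthly_cost(80)  # old server
new_cost = monthly_cost(30)  # new server at idle (upper bound)
print(f"old: ${old_cost:.2f}  new: ${new_cost:.2f}  "
      f"saved: ${old_cost - new_cost:.2f} per month")
```

Under these assumptions the old server comes to about $13.82 a month and the new one to about $5.18, a saving of roughly $8.64 a month.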

-

Relating software refactoring to algebra factoring

Refactoring is a fairly common word in software development. Most people in the software industry have some idea of what it means. However, it was not clear to me where the term comes from and how it relates to the factoring we learned in algebra. A Bing search indicates that the word refactoring originates from Opdyke’s Ph.D. thesis, where it was used for restructuring object-oriented software, but it is not clear how it relates to the factors we normally know. So I attempt to make some sense of it after the fact.

In algebra, a factor is a part that participates in a multiplication. For example, if C = A * B, we call A and B factors of C.

Factors are often used to simplify computation. Taking the distributive identity of multiplication in reverse as an example, C * A + C * B can be rewritten as C * (A + B). This improves computational efficiency if multiplication is far more expensive than addition. C in this case is called the common factor of the two terms, C * A and C * B. If there are no common factors between A and B, we call C the greatest common factor (GCF) of the two terms.

To identify the GCF of two numbers, we usually find all the prime factors of both numbers and, for each prime they share, take the lower of the two exponents. For example, to find the GCF of 72 and 120, we would write 72 as 2^3 * 3^2 and 120 as 2^3 * 3^1 * 5^1. Then the GCF would be 2^3 * 3^1 = 24. (Note that in a real computer program we usually calculate the GCF using Euclid’s algorithm.)
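Both ways of computing the GCF can be sketched in Python. The function names are mine, and the trial-division factorization is only meant for small numbers like the ones in the example.

```python
from collections import Counter


def prime_factors(n):
    """Prime factorization by trial division: prime -> exponent."""
    factors = Counter()
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] += 1
            n //= p
        p += 1
    if n > 1:
        factors[n] += 1  # whatever is left is itself prime
    return factors


def gcf_by_factoring(a, b):
    # Counter & Counter keeps each shared prime with the lower exponent.
    common = prime_factors(a) & prime_factors(b)
    result = 1
    for prime, exponent in common.items():
        result *= prime ** exponent
    return result


def gcf_euclid(a, b):
    """Euclid's algorithm: replace (a, b) with (b, a mod b) until b is 0."""
    while b:
        a, b = b, a % b
    return a


print(dict(prime_factors(72)), dict(prime_factors(120)))
print(gcf_by_factoring(72, 120), gcf_euclid(72, 120))
```

Both functions return 24 for 72 and 120. Euclid's version avoids factoring entirely, which is why it is the usual choice in real programs.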

Now let us look at an analogy to algebraic factoring in Boolean algebra. There is an identity (A and B) or (A and C) = A and (B or C). If we relate “and” to * and “or” to +, the above identity is very similar to the distributive identity in algebra.
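The Boolean identity is easy to confirm by brute force over all eight combinations of truth values. A small sketch (the function name is mine):

```python
from itertools import product


def and_distributes_over_or():
    """Check (A and B) or (A and C) == A and (B or C) for every input."""
    return all(
        ((a and b) or (a and c)) == (a and (b or c))
        for a, b, c in product([False, True], repeat=3)
    )


print(and_distributes_over_or())
```

The check passes for every row of the truth table, so the call returns True.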

In software refactoring, we usually identify the longest common sequence that is duplicated (let me set parameterization aside for simplicity) and try to reuse the sequence as a single unit. How do we find an analogy to algebra? Let us consider an instruction in a sequence as a function that takes the state of the computer as input and returns the modified state. Then a sequence of instructions can be written as f1(f2(f3(…fn()))). If we replace the () symbol with *, we can write the above as f1 * f2 * f3 * … * fn. Now we use + to denote any situation where we need branching. For example, we could write if (cond) { A } else { B } as A + B. Now let us apply this notation to a more complex example:

if (cond) { A; B; C; } else { D; B; E; }

A to E above are blocks of code. Using the above notation, we can rewrite it as A * B * C + D * B * E. If we try to factor this expression, we get (A + D) * B * (C + E). (Note that this is a bit different from normal algebraic factoring: in ordinary algebra the product would also expand to the cross terms A * B * E and D * B * C, which cannot occur here because both branches test the same condition.) If we now expand the factored expression back into code, we get something like:

if (cond) { A; } else { D; } B; if (cond) { C; } else { E; }

That is in fact one of the fairly common types of refactoring.
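To convince ourselves that the two forms behave the same, here is a small Python sketch in which each block simply records its name in a trace. It rests on an assumption the notation glosses over: blocks A, D, and B must not change the value of cond, otherwise the second test could take a different branch than the first.

```python
def run_original(cond):
    trace = []
    if cond:
        trace += ["A", "B", "C"]
    else:
        trace += ["D", "B", "E"]
    return trace


def run_refactored(cond):
    trace = []
    if cond:
        trace.append("A")
    else:
        trace.append("D")
    trace.append("B")  # the common block now appears only once
    if cond:
        trace.append("C")
    else:
        trace.append("E")
    return trace


for cond in (True, False):
    print(cond, run_original(cond), run_refactored(cond))
```

For both values of cond the two functions produce the same trace, A B C when cond is true and D B E when it is false.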